Robotic


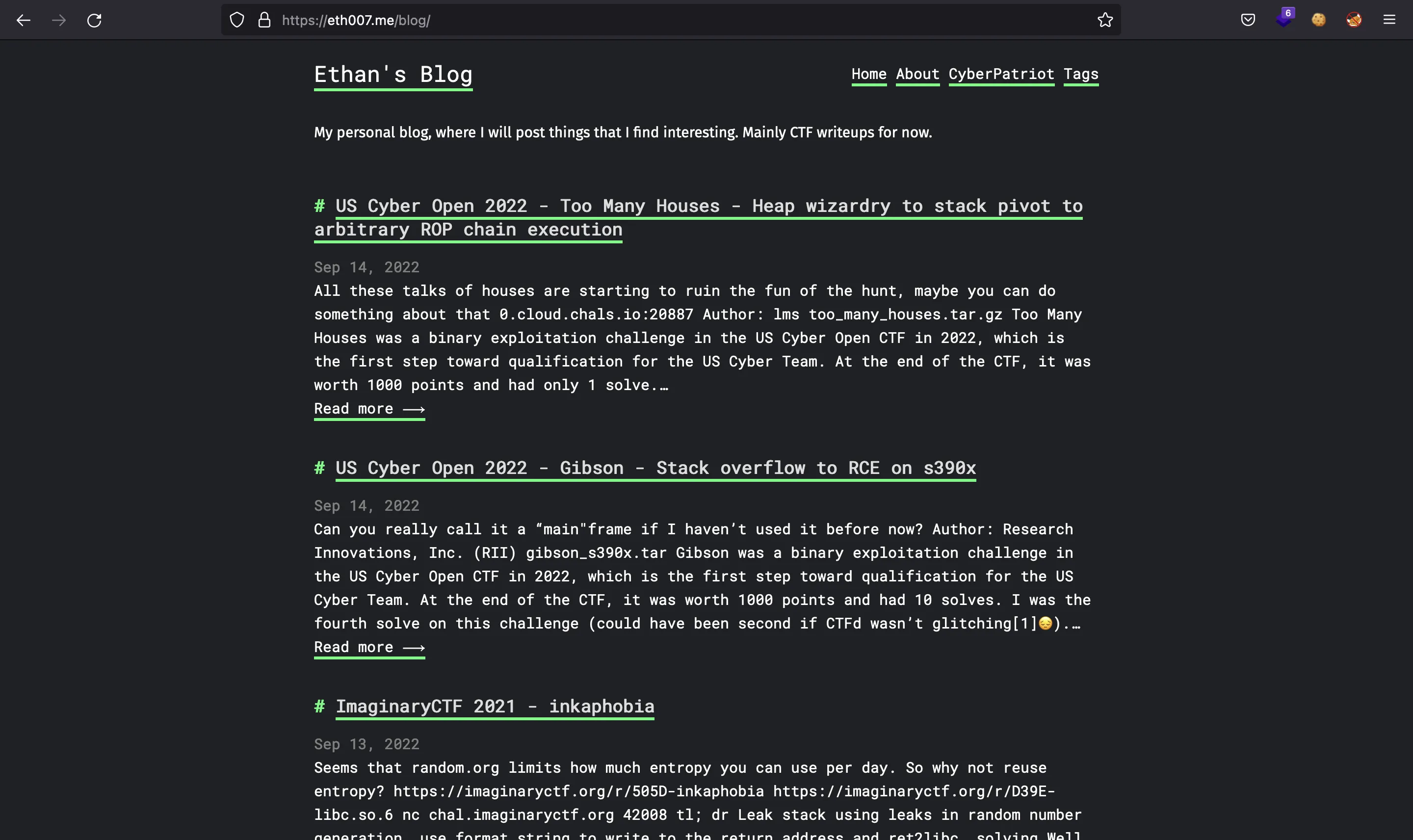

We are given this website (https://eth007.me/):

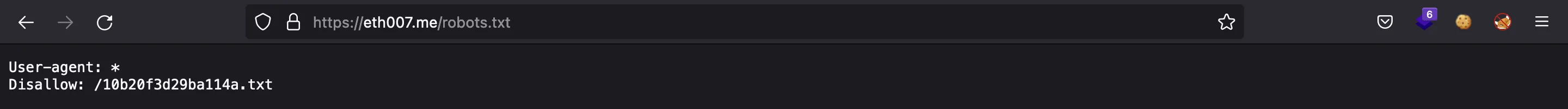

Since the challenge is named “Robotic”, a natural place to look is robots.txt, a file served at the root of a website that tells web crawlers (such as the ones behind search engines like Google) which paths they may or may not crawl. On this site, that file lists the following resource:

Flag

If we follow that URL (which is marked as Disallow for web crawlers), we will see the flag:

$ curl https://eth007.me/10b20f3d29ba114a.txt

ictf{truly_not_a_robot}
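
The robots.txt lookup described above can also be scripted. The following is a minimal Python sketch, not part of the original solution: it downloads a site's robots.txt and turns every `Disallow` rule into an absolute URL worth visiting by hand. The function names `disallowed_paths` and `fetch_disallowed_urls` are hypothetical, and the parsing is deliberately simple (it ignores `User-agent` grouping and wildcards).

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def disallowed_paths(robots_txt: str) -> list[str]:
    """Return the path of every non-empty Disallow rule, in file order."""
    paths = []
    for line in robots_txt.splitlines():
        # Drop trailing comments, then split "Field: value" on the first colon.
        field, sep, value = line.split("#", 1)[0].partition(":")
        if sep and field.strip().lower() == "disallow" and value.strip():
            paths.append(value.strip())
    return paths


def fetch_disallowed_urls(base_url: str) -> list[str]:
    """Download base_url's robots.txt and return absolute URLs for its Disallow rules."""
    with urlopen(urljoin(base_url, "/robots.txt"), timeout=10) as response:
        text = response.read().decode("utf-8", errors="replace")
    return [urljoin(base_url, path) for path in disallowed_paths(text)]
```

Calling `fetch_disallowed_urls("https://eth007.me/")` would be expected to return the disallowed URLs for this challenge, assuming the site is still online; each one can then be requested with `curl` as shown above.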